26 Nov 2014

If you have ever wondered why the 10 Gbit link on your Proxmox node is only a few percent utilized during a migration, you have come to the right place.

The main reason is the security measure that protects virtual machine memory during migration. By default the entire memory content is sent through an SSH tunnel, and the encryption overhead limits the transfer speed:

Nov 24 12:26:41 starting migration of VM 123 to node 'proxmox1' (10.0.1.1)

Nov 24 12:26:41 copying disk images

Nov 24 12:26:41 starting VM 123 on remote node 'proxmox1'

Nov 24 12:26:43 starting ssh migration tunnel

Nov 24 12:26:43 starting online/live migration on localhost:60000

Nov 24 12:26:43 migrate_set_speed: 8589934592

Nov 24 12:26:43 migrate_set_downtime: 0.1

Nov 24 12:26:45 migration status: active (transferred 133567908, remaining 930062336), total 1082789888)

Nov 24 12:26:47 migration status: active (transferred 273781779, remaining 788221952), total 1082789888)

...

Nov 24 12:26:58 migration status: active (transferred 1036346176, remaining 20889600), total 1082789888)

Nov 24 12:26:58 migration status: active (transferred 1059940218, remaining 11558912), total 1082789888)

Nov 24 12:26:59 migration speed: 64.00 MB/s - downtime 54 ms

Nov 24 12:26:59 migration status: completed

Nov 24 12:27:02 migration finished successfuly (duration 00:00:21)

TASK OK

If your Proxmox cluster uses a dedicated network that is isolated from the public one, you can lower the security level and send the memory unencrypted:

$ cat /etc/pve/datacenter.cfg

....

migration_unsecure: 1

That is all. A migration now looks like this:

Nov 24 12:42:19 starting migration of VM 100 to node 'proxmox2' (10.0.1.2)

Nov 24 12:42:19 copying disk images

Nov 24 12:42:19 starting VM 100 on remote node 'proxmox2'

Nov 24 12:42:35 starting ssh migration tunnel

Nov 24 12:42:36 starting online/live migration on 10.0.1.2:60000

Nov 24 12:42:36 migrate_set_speed: 8589934592

Nov 24 12:42:36 migrate_set_downtime: 0.1

Nov 24 12:42:38 migration status: active (transferred 728684636, remaining 5655494656), total 6451433472)

Nov 24 12:42:40 migration status: active (transferred 1465523175, remaining 4865253376), total 6451433472)

....

Nov 24 12:42:55 migration status: active (transferred 7115710846, remaining 69742592), total 6451433472)

Nov 24 12:42:55 migration speed: 323.37 MB/s - downtime 262 ms

Nov 24 12:42:55 migration status: completed

Nov 24 12:42:58 migration finished successfuly (duration 00:00:39)

TASK OK

Migration now runs about five times faster (323 MB/s instead of 64 MB/s), because the SSH encryption is no longer the bottleneck. This is also very useful when migrating heavily loaded instances.
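The speeds reported in the two logs can be cross-checked with simple arithmetic. The sketch below assumes the figure is the guest memory size in MiB divided by the whole seconds between the "starting online/live migration" and "migration speed" lines, and that the two guests had 1024 MiB and 6144 MiB of RAM. Both assumptions are inferred from the logs, not taken from the Proxmox sources. The helper names `migration_rate` and `link_share` are hypothetical.

```shell
#!/bin/sh
# migration_rate MEM_MIB SECONDS
# Average transfer rate in MiB/s (hypothetical helper, not part of Proxmox).
migration_rate() {
    awk -v mem="$1" -v secs="$2" 'BEGIN { printf "%.2f\n", mem / secs }'
}

# link_share RATE_MIB_S LINK_GBIT
# Percentage of a LINK_GBIT link consumed by a rate given in MiB/s.
link_share() {
    awk -v rate="$1" -v link="$2" \
        'BEGIN { printf "%.1f\n", rate * 1048576 * 8 / (link * 1000000000) * 100 }'
}

migration_rate 1024 16   # VM 123, through the SSH tunnel: 64.00
migration_rate 6144 19   # VM 100, unencrypted: 323.37
link_share 64 10         # share of a 10 Gbit link with the tunnel: 5.4
link_share 323.37 10     # share of a 10 Gbit link without it: 27.1
```

Under these assumptions the tunnelled migration uses roughly 5% of a 10 Gbit link and the unencrypted one roughly 27%.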

24 Nov 2014

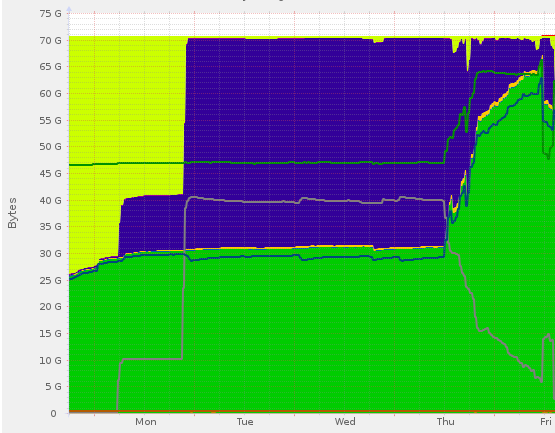

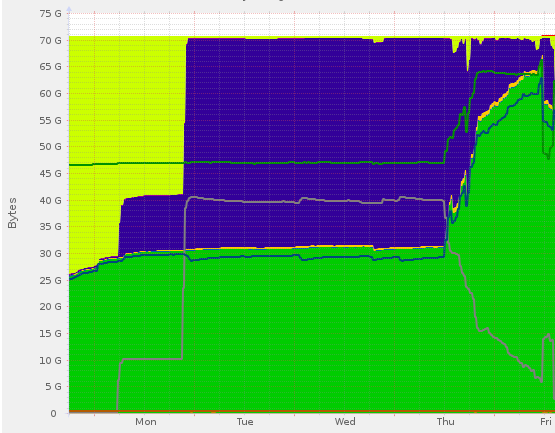

Today we discuss how to manage your cache settings in Ceph wisely (docs.ceph.com/en/latest/rbd/index.html). The cache discussed below belongs to the user-space implementation of the Ceph block device (i.e., librbd).

After migrating to Ceph we suddenly observed that the memory usage of every virtual machine had doubled.

After some research we found a configuration error in ceph.conf. The option rbd cache size sets the amount of cache reserved for every virtual disk, so the total amount of RAM reserved for cache is given by

total amount of RAM = (number of virtual disks) × (rbd cache size)
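The formula above can be evaluated directly in the shell. This is a minimal sketch: the helper name `rbd_cache_total_mib` and the disk counts are made up for illustration, and the result is best read as an upper bound on the cache memory.

```shell
#!/bin/sh
# rbd_cache_total_mib DISKS CACHE_BYTES
# Upper bound, in MiB, of RAM used by the librbd cache for DISKS virtual disks.
# Hypothetical helper for illustration only.
rbd_cache_total_mib() {
    echo $(( $1 * $2 / 1048576 ))
}

rbd_cache_total_mib 3 67108864    # one VM with 3 disks, 64 MiB cache each: 192
rbd_cache_total_mib 40 67108864   # a host with 40 virtual disks in total: 2560
```

With a much larger rbd cache size the same host would reserve proportionally more RAM, which is how a single wrong value in ceph.conf can inflate the memory usage of every virtual machine.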

Reasoning that a standard hard drive usually has about 64 MB of cache, we settled on the following configuration (these options belong in the [client] section of ceph.conf):

rbd cache = true

rbd cache size = 67108864 # (64MB)

rbd cache max dirty = 50331648 # (48MB)

rbd cache target dirty = 33554432 # (32MB)

rbd cache max dirty age = 2

rbd cache writethrough until flush = true

Now push the configuration to the virtualization hosts:

$ ceph-deploy --overwrite-conf config push host1 host2

To apply the settings without restarting a virtual machine, use KVM live migration. Once the virtual machine has been migrated from host1 to host2, the new process on host2 starts with the modified Ceph configuration.

If you are not sure about the sizes, keep Ceph's default values for the amount of cache and simply enable it:

rbd cache = true

rbd cache writethrough until flush = true

20 Nov 2014

STEP 1:

Choose a disk with the best IOPS/price ratio. Check out the list of disks ordered by IOPS (Input/Output Operations Per Second) performance: en.wikipedia.org/wiki/IOPS.

Quite often SSDs are released with firmware bugs or with non-optimal configurations, so before putting the system into production, check for the latest firmware version.

STEP 2:

Check that AHCI (Advanced Host Controller Interface) is enabled and working:

$ sudo dmesg | grep -i ahci

ahci 0000:00:11.0: version 3.0

ahci 0000:00:11.0: irq 43 for MSI/MSI-X

ahci 0000:00:11.0: AHCI 0001.0200 32 slots 6 ports 3 Gbps 0x3f impl SATA mode

ahci 0000:00:11.0: flags: 64bit ncq sntf ilck pm led clo pmp pio slum part

scsi0 : ahci

scsi1 : ahci

scsi2 : ahci

Check whether your controller supports AHCI:

$ sudo lshw | grep -i ahci

product: 82801JI (ICH10 Family) SATA AHCI Controller

capabilities: storage msi pm ahci_1.0 bus_master cap_list emulated

configuration: driver=ahci latency=0

Quite often AHCI is disabled in the BIOS; in that case reboot and enable it.

I observed unstable behavior of disks without AHCI enabled and even the inability to execute TRIM correctly.

Identify the SATA mode the controller provides (for example, SATA II at 3 Gbps has a raw line rate of 375 MB/s, which 8b/10b encoding reduces to about 300 MB/s of usable throughput):

$ sudo dmesg | grep SATA

ahci 0000:00:11.0: AHCI 0001.0200 32 slots 6 ports 3 Gbps 0x3f impl SATA mode

ata1: SATA max UDMA/133 abar m1024@0xfddffc00 port 0xfddffd00 irq 43

Check what the disk supports:

$ sudo hdparm -I /dev/sda | grep SATA

Transport: Serial, ATA8-AST, SATA 1.0a, SATA II Extensions, SATA Rev 2.5, SATA Rev 2.6, SATA Rev 3.0

Ideally the disk supports SATA Rev 3.0 or higher, which provides a 6 Gbps link.
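The link-speed arithmetic above can be sketched as follows. SATA uses 8b/10b encoding, so only 8 of every 10 bits on the wire carry data. The helper names are hypothetical, and the results are theoretical ceilings, not speeds a real disk will reach.

```shell
#!/bin/sh
# sata_raw_mb_s LINK_MBIT
# Raw line rate in MB/s for a link speed given in Mbit/s.
sata_raw_mb_s() {
    echo $(( $1 / 8 ))
}

# sata_payload_mb_s LINK_MBIT
# Usable MB/s after 8b/10b encoding (8 data bits per 10 line bits).
sata_payload_mb_s() {
    echo $(( $1 * 8 / 10 / 8 ))
}

sata_raw_mb_s 3000       # SATA II raw: 375
sata_payload_mb_s 3000   # SATA II usable: 300
sata_payload_mb_s 6000   # SATA Rev 3.0 usable: 600
```

An SSD capable of more than 300 MB/s is therefore limited by a 3 Gbps port such as the one in the dmesg output above.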

STEP 2.5: Pause

STEP 3:

Check that TRIM (wikipedia.org/wiki/Trim) is supported by the disk:

$ sudo hdparm -I /dev/sda | grep -i trim

* Data Set Management TRIM supported (limit 8 blocks)

It is very important to have TRIM working. Without TRIM, write speed degrades over time, because the SSD does not know which blocks are free and has to erase cells right before they are rewritten.

Let's test it simply by executing fstrim:

$ sudo fstrim -v /

/: 98147174400 bytes were trimmed

Let's test whether TRIM is really doing what it should:

$ sudo wget -O /tmp/test_trim.sh "https://sites.google.com/site/lightrush/random-1/checkiftrimonext4isenabledandworking/test_trim.sh?attredirects=0&d=1"

$ sudo chmod +x /tmp/test_trim.sh

$ sudo /tmp/test_trim.sh <tempfile> 50 /dev/sdX

If TRIM is working properly, the output of the last command should be a bunch of zeros. Thanks to Nicolay Doytchev for this script.

STEP 4:

Be sure to format the disk with an SSD-friendly file system: EXT4, F2FS, BTRFS, XFS, or any other from the list "File systems optimized for flash memory".

If it is not, migrate it to at least EXT4 with the help of the manual "Migrating a live system from ext3 to ext4 filesystem", or consider a complete re-installation of the operating system.

STEP 5:

Mount the disk with the correct parameters:

$ cat /etc/fstab

/dev/pve/data /var/lib/vz ext4 discard,noatime,commit=600,defaults 0 1

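Check whether the disk is identified as non-rotational (no sudo is needed to read these files, and `sudo for` would fail because `for` is a shell keyword, not a command):

$ for f in /sys/block/sd?/queue/rotational; do printf '%s is ' "$f"; cat "$f"; done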

/sys/block/sda/queue/rotational is 0

If you see 1 for an SSD, there is a problem with the kernel or with AHCI.

Next, check that the deadline scheduler is selected for our brand new SSD:

$ for f in /sys/block/sd?/queue/scheduler; do printf '%s is ' "$f"; cat "$f"; done

/sys/block/sda/queue/scheduler is noop [deadline] cfq

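If not, execute the following (the write goes through `sudo tee` because with `sudo echo ... > file` the redirection is performed by the unprivileged shell and fails):

$ echo deadline | sudo tee /sys/block/sda/queue/scheduler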
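The two checks above can be combined into one helper that sets the scheduler only on disks reporting themselves as non-rotational. This is a minimal sketch: the function name `set_ssd_scheduler` and its optional second argument (an alternative sysfs directory, handy for a dry run on a copy) are assumptions for illustration, not an existing tool.

```shell
#!/bin/sh
# set_ssd_scheduler SCHED [SYSFS_BLOCK_DIR]
# Writes SCHED to queue/scheduler of every sd? device whose queue/rotational
# is 0, and prints one line per changed device. Hypothetical helper.
set_ssd_scheduler() {
    sched=$1
    root=${2:-/sys/block}
    for dev in "$root"/sd?; do
        # Skip entries without a readable rotational flag (or an unmatched glob).
        [ -r "$dev/queue/rotational" ] || continue
        if [ "$(cat "$dev/queue/rotational")" = "0" ]; then
            echo "$sched" > "$dev/queue/scheduler" && echo "${dev##*/}: $sched"
        fi
    done
}

# Example (must run as root to write to /sys):
#   set_ssd_scheduler deadline
```

Values written to /sys do not survive a reboot, so a call like this would have to be repeated at boot time.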
